Security Dangers of Overconfidence

The state of web application security is somewhat scattered: organizations are deploying multiple solutions without a clear strategy for determining who is ultimately responsible for driving decision-making.

Surprisingly, organizations do not recognize that this scattered approach still leaves them vulnerable to attack. Respondents remained highly confident in their ability to recognize bad bot traffic and detect threats in their networks.

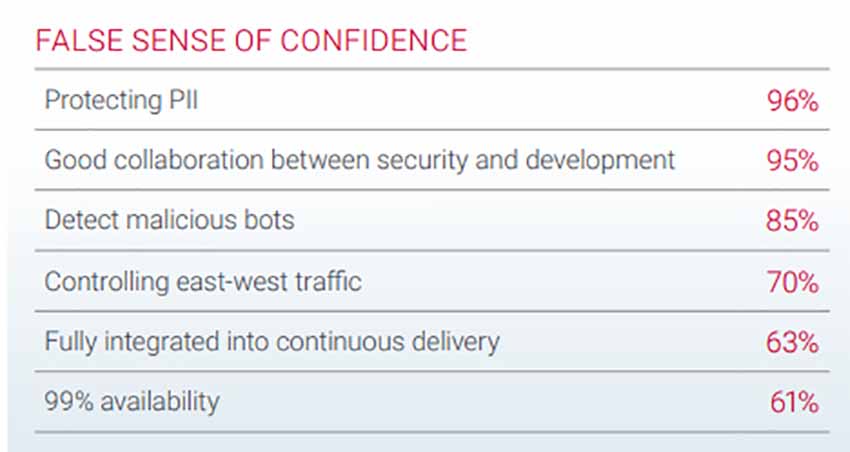

A False Sense of Confidence

Those responsible for IT security in the organization feel they are doing a good job of distinguishing between good and bad bots on their networks, yet bad bots continue to be a significant and evolving security threat. Bad bots were observed to account for more than 40% of the total traffic to applications on their networks.

A survey shows the following:

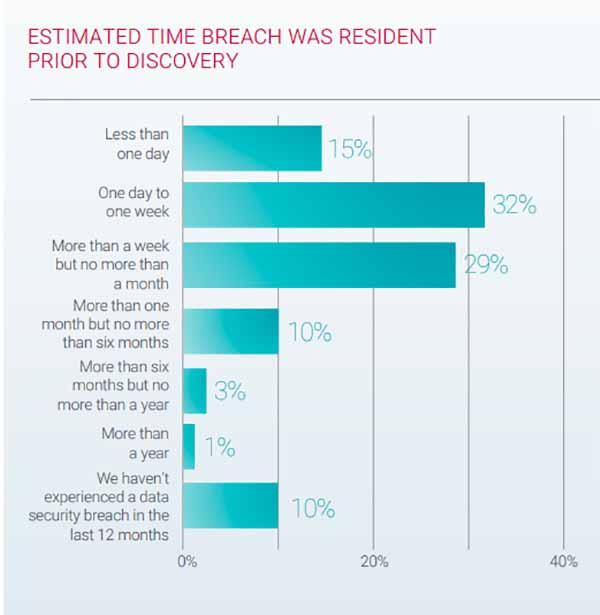

Ninety percent of organizations that experienced a breach in the past 12 months believed that dwell time in their networks was one month or less. This time span is much shorter than what two recent large empirical studies determined was the average time that breaches were resident in networks before discovery.

IBM Security’s 2019 Cost of a Data Breach report found that the average time to identify and contain a breach was 279 days, or about nine months. The Verizon 2019 Data Breach Investigations Report stated that discovery of a breach was “likely to be months” and was “very dependent on the type of attack in question.”

Confidence also extended to organizations that employed multiple cloud providers. Seventy-one percent of respondents felt that they could enforce the same level of security across all hosted applications.

Attacks Still Find a Way

Even though the respondents expressed confidence in their organizations’ capabilities to protect applications either on-premises or in hosted environments, attacks were still successful. Hackers seemed to love the challenge that new technologies introduced. They employed many tools to scan and map applications to identify vulnerabilities.

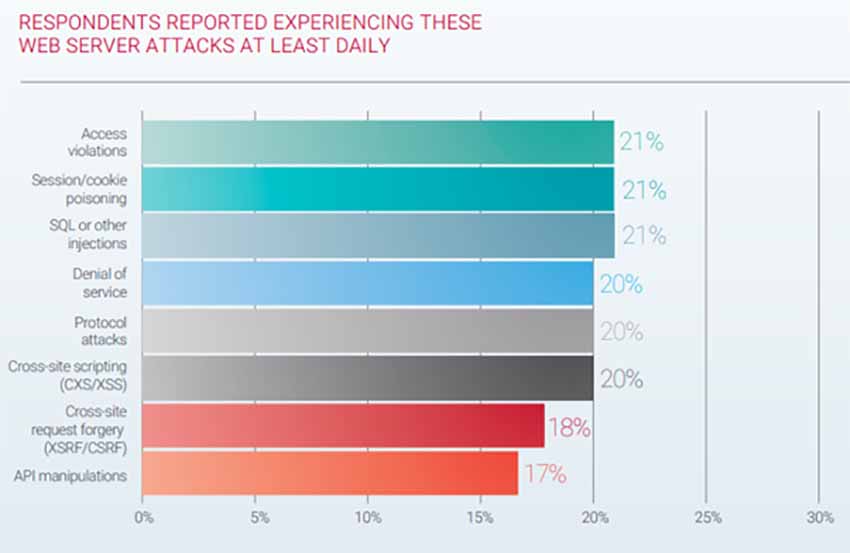

In addition to new and better ways to meet customer demand for relevant interactions with brands, emerging technologies also offered up a wider attack surface and new exposure to threats. Indeed, respondents indicated that they were under ongoing attacks on a variety of fronts.

Web application attacks: For many years, SQL injection (SQLi) and cross-site scripting (XSS) attacks have been the most prevalent attack types. Recently there was a rise in API manipulations and session cookie poisoning in both overall quantity and frequency. Access violations were the most common attack type overall.

API attacks: Access violations, which are the misuse of credentials, and denial of service (DoS) were the most common daily API attacks reported in the survey. Other threats included injections, data leakage, element attribute manipulations, irregular JSON/XML expressions, protocol attacks and brute force. Gartner predicts that, by 2022, API abuses will move from an infrequent to the most frequent attack vector, resulting in data breaches for enterprise web applications.

Bot attacks: Although web scraping is the most common attack overall, account takeover, DoS and payment abuse were the most common bot attacks and occurred daily.

Application Denial-of-Service attacks: Twenty percent of organizations experienced DoS attacks on their application services every day. Buffer overflow was the most common attack type.

Nikhil Taneja

Managing Director-India, SAARC & Middle East, Radware
